Europe's Digital Services Act (DSA) is Approved: What You Need to Know


October.28.2022

The Digital Services Act is now in full effect as of February 2024. Our latest update covers five takeaways for businesses to consider as they navigate the DSA.

The final text of the European Union’s (EU) Digital Services Act (“DSA”) was recently approved by the Council of the EU Member States. That means that the final text of the regulation should be published in the Official Journal of the European Union in November, with its provisions taking effect twenty days following publication. Companies subject to the DSA’s requirements will have a fifteen-month grace period to comply, except for specifically designated “Very Large Online Platforms” and “Very Large Online Search Engines”, which are subject to a shorter compliance timeframe (for more details, see below).

This update explains what the DSA is and which organizations must comply with its provisions, and summarizes the key obligations that apply according to how an organization is classified under the DSA.

If your organization has an online presence that targets EU users, it may be subject to the terms of the DSA.

Quick Summary

What Is the DSA?

The DSA updates and builds on the eCommerce Directive 2000/31/EC. Its dual objective is the creation of an EU digital space where the rights of consumers and professional users are protected, and the establishment of a level playing field that will foster growth and innovation.

To achieve these objectives, the DSA imposes new obligations on intermediary service providers and online search engines to identify “illegal online content” and adopt measures to prevent its dissemination. The DSA also contains rules, as described in more detail below, regarding mandatory information to be provided to both consumers and professional users in the service provider’s terms of use.

Importantly, the DSA is supplemental to and does not replace other EU and national legislation that imposes rules regarding online content, including:

- Directive on Copyright in the Digital Single Market 2019/790

- Audiovisual Media Services Directive 2010/13/EU

- Regulation on addressing the dissemination of terrorist content online 2021/784 (effective from 7 June 2022)

What Businesses Are Impacted?

- Online Service Providers: Online service providers that target EU users should assess whether they are a provider of “intermediary services” and if so, which of the DSA obligations will be applicable to their business.

- Intermediary Service Providers That Are Online Platforms: Intermediary service providers in the form of “online platforms” are particularly impacted since most of the obligations in the DSA apply to online platforms. Such platforms will need to implement policies, internal processes, and new functionalities, in order to comply. The so-called Very Large Online Platforms (VLOPs) impacted by the DSA are already taking steps to implement their compliance.

- Online Search Engines: These are defined as digital services that allow users to input queries in order to perform searches of websites. DSA obligations applicable to the VLOPs also apply to Very Large Online Search Engines (VLOSE).

- Advertisers: Advertisers should also take note of the new transparency obligations that apply to intermediaries in relation to the ads displayed on their services.

Many of the obligations require the implementation of policies and internal processes designed to capture information for subsequent use and disclosure. These requirements are complex and could require organizations to redesign back-end systems to comply, so organizations subject to the DSA would be well-advised to begin work on such policies and processes sooner rather than later.

Deeper Dive – An Overview of the DSA

1. Application

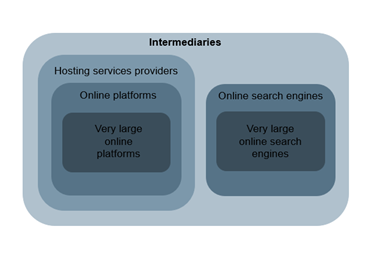

The DSA applies its obligations in tiers, as shown in the accompanying graphic (inspired by the European Commission). The baseline obligations apply to all providers of “intermediary services,” which include “hosting services,” which in turn include “online platforms,” the largest of which are “very large online platforms.” “Very large online search engines” are subject to the same top-tier obligations as very large online platforms.

Intermediaries Under the DSA Include:

- Mere conduit services such as internet service providers.

- Caching services such as cloud services providers offering automatic, intermediate and temporary storage of information, for the sole purpose of making the information's onward transmission more efficient.

- Hosting services, which store information provided by service users (e.g., cloud services providers, webhosting). Hosting services include:

- Online platforms: Hosting services that store and disseminate information to the public, at the request of service users, as their primary activity (e.g., marketplaces, app stores, collaborative economy platforms and social media platforms). Providers of hosting services that disseminate such information as a merely minor or ancillary feature of the service will not be considered online platforms (for example, the comments section in an online newspaper).

- VLOPs: Very large online platforms are those online platforms that, for at least four consecutive months, provide their services to a number of average monthly active recipients of the service in the Union equal to or higher than 45 million.

- Online Search Engines are defined as intermediary services and may also be designated as Very Large Online Search Engines (“VLOSE”) according to the same criteria as the VLOPs.
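The 45 million threshold can be illustrated with a short calculation. The sketch below is a simplification, not the official methodology (which, as noted later in this update, is to be set out in further legislation): it assumes a plain arithmetic average of monthly counts, and the function name and inputs are hypothetical.

```python
from statistics import mean

# Threshold stated in the DSA: average monthly active recipients in the Union.
VLOP_THRESHOLD = 45_000_000


def meets_very_large_threshold(monthly_active_recipients: list[int]) -> bool:
    """Return True if the simple average of the monthly counts reaches 45 million.

    Assumption: a plain arithmetic mean is used here for illustration only;
    the official calculation methodology may differ.
    """
    if not monthly_active_recipients:
        raise ValueError("at least one monthly count is required")
    return mean(monthly_active_recipients) >= VLOP_THRESHOLD


# Hypothetical figures for four consecutive months.
counts = [44_000_000, 46_000_000, 45_500_000, 45_200_000]
is_very_large = meets_very_large_threshold(counts)
```

A service close to the threshold would want to track these figures month by month, since designation by the European Commission triggers the additional obligations described in section 4.D.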

2. Territorial Scope

The DSA applies to intermediary services that are provided to users that are established or have their residence in the EU, without regard to where the intermediary is established. Non-EU based intermediaries that have EU-based users will therefore be expected to comply.

3. Intermediary Liability

The eCommerce Directive enshrined the principle that an intermediary shall not be liable for information transmitted through its service, provided that it was not actively involved in the transmission and/or it acted to remove or disable access to the information upon receiving notice.

The DSA retains this exemption from liability for intermediaries, with slight modifications, but imposes on hosts (and the subset categories of online platforms and very large online platforms) a set of due diligence requirements in relation to illegal content, as described below. The DSA maintains that intermediary service providers do not have a general monitoring obligation in relation to the information that they transmit or store.

4. Obligations

A. Applicable to All Intermediaries [Article 8 et seq.]

Intermediary service providers will be required to do the following:

- Act against items of illegal content (e.g., take them down) and/or provide requested information about individual service recipients upon receipt of a duly issued order by the relevant national authority (the DSA specifies the conditions to be satisfied by such orders).

- Designate a single point of contact within the organization for national authorities. Intermediaries that do not have an establishment in the EU will have to appoint a legal representative in a Member State where the intermediary offers its services (there may be a possibility of collective representation for micro, small, and medium enterprises).

- Comply with specific obligations in relation to the form and content of the intermediary service terms and conditions. For instance, the terms must be fair, non-discriminatory, and transparent, and must include information regarding how to terminate services, restrictions imposed on the delivery of services, and also regarding the use of algorithmic tools for content-moderation. Details of rules of internal complaints handling systems should also be disclosed.

- For services provided to minors or predominantly used by them, express the terms in easily understandable language.

- Protect the anonymity of users except in relation to traders.

- Publish an annual transparency report on any content moderation they engaged in, including specified information such as the number of orders received from Member States’ authorities, response times, and information about the own-initiative content moderation practices of the service, including the use of automated tools and the restrictions applied, and information about the training and assistance provided to moderators. (This obligation does not apply to micro or small enterprises that do not qualify as very large online platforms.) These obligations, and others, will require the implementation of specific internal processes in order to capture the required information.

B. Applicable Only to Intermediaries That Are Hosting Services, Including Online Platforms [Article 14 et seq.]

- Hosting services shall have a notification mechanism allowing users to signal content that they consider to be illegal content. The mechanism must be designed to facilitate sufficiently precise and substantiated notices to permit the identification of the reported content.

- ‘Illegal content’ means any information or activity, including the sale of products or provision of services, which is not in compliance with EU law or the law of a Member State, irrespective of the precise subject matter of that law.

- If a notice containing the required information is received, the hosting service will be deemed to have actual awareness of the content and its potential illegality (which has implications for the service’s liability).

- Hosting services must provide a statement of reasons to the user if their content is disabled or removed or if services are suspended. This explanation must contain certain information, including the facts relied upon and a reference to the legal ground relied upon, or other basis for the decision if it was based on the host’s terms and conditions. However, law enforcement authorities may request that no explanation is provided to users.

- There is a positive obligation to alert law enforcement or judicial authorities if the host suspects that a serious criminal offence involving a threat to life or safety of persons is taking place or is planned.

- The anonymity of the content reporter is to be protected, except in relation to reports involving alleged violation of image rights and intellectual property rights.

- The transparency reports prepared by hosting services will have additional information, including the number of reports submitted by trusted flaggers and should be organized by type of illegal content concerned, specifying the action taken, the number processed by automated means and the median response time.

C. Applicable Only to Hosting Services That Are Online Platforms [Article 16 et seq.]

- The obligations in this section do not apply to micro or small enterprises, except if they qualify as very large online platforms. Intermediary services may apply to be exempted from the requirements of this section of the DSA.

- Online platforms shall provide an appeal process against decisions taken by the platform in relation to content that is judged to be illegal or in breach of the platform’s terms and conditions. The relevant user will have six months to appeal the decision. Decisions shall not be fully automated and shall be taken by qualified staff.

- Users will be able to refer decisions to an out-of-court dispute settlement body certified by the Digital Services Coordinator of the relevant Member State. Clear information regarding this right shall be provided on the service’s interface.

- Content reported by trusted flaggers shall be processed with priority and without delay. An entity may apply to the Digital Services Coordinator to be designated as a trusted flagger, based on criteria set out in the DSA.

- Online platforms are permitted to suspend users for a reasonable period of time, after issuing a prior warning, if those users repeatedly upload illegal content. Online platforms shall also suspend the processing of notices and complaints from users that repeatedly submit unfounded notices and complaints.

- Online platforms are required to ensure that their services meet the accessibility requirements set out in the EU Directive 2019/882, including accessibility for persons with disabilities, and shall explain how the services meet these requirements.

- There is a specific prohibition applicable to online platforms in relation to the use of “dark patterns”. The European Commission may issue further guidance in relation to specific design practices. The prohibition does not apply to practices covered by the Directive concerning unfair business-to-consumer practices, or by the GDPR.

- To ensure the traceability of traders (i.e. professionals that use online platforms to conduct their business activities), online marketplaces shall only allow traders to use their platform if the trader first provides certain mandatory information to the platform, including contact details, an identification document, bank account details, and details regarding the products that will be offered. Online platforms shall make best efforts to obtain such information from traders that are already using the platform services within 12 months of the date of coming into force of the DSA.

- A trader who has been suspended by an online platform may appeal the decision using the online platform’s complaint handling mechanism.

- Online platforms that allow consumers to conclude distance contracts with traders through their services shall design their interface so as to enable traders to provide consumers with the required pre-contractual information, compliance and product safety information. Traders should be able to provide clear and unambiguous identification of their products and services, any sign identifying the trader (e.g. a logo or trademark), and information concerning mandatory labelling requirements.

- Online platforms shall make reasonable efforts to randomly check whether the goods and services offered through their service have been identified as being illegal. If an online platform becomes aware that an illegal product or service has been offered to consumers it shall, where it has relevant contact details or otherwise by online notice, inform consumers of the illegality and the identity of the trader, and available remedies.

- To promote online advertising transparency, online platforms shall ensure that service users receive the following information regarding online ads: that the content presented to users is an advertisement, the identity of the advertiser or person that has financed the advertisement, information regarding the parameters used to display the ad to the user (and information about how a user can change those parameters).

- Targeting techniques that involve the personal data of minors or sensitive personal data (as defined under the GDPR) are prohibited.

- Online platform providers shall provide users with functionality that allows them to declare that their content is a “commercial communication” (i.e. an advertisement / sponsored content).

- Online platforms have transparency obligations regarding any recommender system that is used to promote content. The online platform must disclose the main parameters used, as well as options for the recipient to modify or influence the parameters.

D. Applicable Only to Online Platforms Which Are Very Large Online Platforms and to Very Large Online Search Engines [Articles 25 et seq.]

- The obligations in this section apply only to online platforms and online search engines that provide their services to 45 million or more monthly active users, calculated in accordance with a methodology to be set out in further legislation. The European Commission will designate the online platforms and online search engines that qualify and the list will be published.

- VLOPs and VLOSE must publish their terms and conditions in the official languages of all Member States where their services are offered (this is often a requirement of national consumer protection law as well).

- VLOPs and VLOSE shall carry out an annual risk assessment of their services (and in any event before launching a new service). The risk assessment shall take into account in particular risks of: dissemination of illegal content, negative effects for the exercise of fundamental rights, actual or foreseeable negative effects on civic discourse, electoral processes and public security, or in relation to gender-based violence, public health, minors and physical and mental well-being. VLOPs and VLOSE shall consult with user representatives, independent experts and civil society organisations.

- VLOPs and VLOSE must implement mitigation measures to deal with these systemic risks. The DSA lists measures that might be adopted.

- VLOPs and VLOSE shall have independent audits carried out at least once a year, by independent firms, to assess their compliance with the DSA requirements and any commitments undertaken pursuant to a code of conduct. The DSA imposes certain conditions on the firms that shall conduct such audits (e.g. they shall be independent and free of conflicts of interest).

- VLOPs and VLOSE may be required by the Commission to take certain specified actions in case of a crisis, including conducting an assessment to determine whether the service is contributing to the serious threat and adopting measures to limit, prevent or eliminate such contribution.

- VLOPs that use recommender systems must provide at least one that is not based on profiling and must provide users with functionality to allow them to set their preferred options for content ranking.

- Additional advertising transparency obligations are applicable, requiring the publication of information regarding the advertisements that have been displayed on the platform, including whether the advertisement was targeted to a group, the relevant parameters and the total number of recipients reached. The information should be available through a searchable tool that allows multicriteria queries.

- VLOPs and VLOSE are required to share data with authorities, where necessary for them to monitor and assess compliance with the DSA. Such information might include explanations of the functioning of the VLOPs’ algorithms. The regulator may also require that VLOPs allow “vetted researchers” (those that satisfy the DSA’s requirements) to access data, for the sole purpose of conducting research that contributes to the identification and understanding of systemic risks.

- VLOPs and VLOSE shall appoint a compliance officer responsible for monitoring their compliance with the DSA.

- VLOPs and VLOSE shall pay the Commission an annual supervisory fee to cover the estimated costs of the Commission (to be determined).

5. Enforcement and Sanctions [Articles 38 et seq.]

A. Digital Services Coordinator - Designation and Powers

- Each Member State shall designate one or more competent authorities as responsible for the application and enforcement of the DSA, and one of these authorities shall be appointed by the Member State as its Digital Services Coordinator. This Digital Services Coordinator will be the main enforcement authority. For non-EU based intermediaries, the competent Digital Services Coordinator will be located in the Member State where these intermediaries have appointed their legal representative. If no legal representative has been designated, then all Digital Services Coordinators will be competent.

- Digital Services Coordinators are granted investigation and enforcement powers, in particular the power to accept intermediary services’ commitments to comply with the DSA, order cessation of infringements, and impose remedies, fines, and periodic penalty payments.

- Users have the right to lodge a complaint against providers of intermediary services alleging an infringement of the DSA with the Digital Services Coordinator of the Member State where the recipient resides or is established.

B. Sanctions

- Temporary access restrictions. Where enforcement measures are exhausted, and in case of persistent and serious harm, the Digital Services Coordinator may request that the competent judicial authority of the Member State order the temporary restriction of access to the infringing service or to the relevant online interface.

- Fines. Sanctions shall be “effective, proportionate and dissuasive”. Member States shall ensure that the maximum amount of penalties imposed for a failure to comply with the provisions of the DSA shall be 6% of the annual worldwide turnover of the intermediary or other person concerned. The maximum amount of a periodic penalty payment shall not exceed 5% of the average daily turnover of the provider in the preceding financial year per day.
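To give a sense of scale, the two ceilings described above can be worked through numerically. The following is a minimal sketch of the arithmetic only; the function names and turnover figures are hypothetical, and the actual amount of any penalty would be set by the competent authority within these limits.

```python
from decimal import Decimal

MAX_FINE_RATE = Decimal("0.06")              # 6% of annual worldwide turnover
MAX_PERIODIC_PENALTY_RATE = Decimal("0.05")  # 5% of average daily turnover, per day


def max_fine(annual_worldwide_turnover: Decimal) -> Decimal:
    """Upper limit of a fine for non-compliance with the DSA."""
    return annual_worldwide_turnover * MAX_FINE_RATE


def max_periodic_penalty(average_daily_turnover: Decimal, days: int) -> Decimal:
    """Upper limit of periodic penalty payments accumulated over `days` days.

    `average_daily_turnover` refers to the preceding financial year.
    """
    if days < 0:
        raise ValueError("days must not be negative")
    return average_daily_turnover * MAX_PERIODIC_PENALTY_RATE * days


# Hypothetical provider: EUR 1 billion annual worldwide turnover,
# EUR 2 million average daily turnover in the preceding financial year.
fine_ceiling = max_fine(Decimal("1000000000"))
penalty_ceiling_10_days = max_periodic_penalty(Decimal("2000000"), days=10)
```

Under these assumed figures, the fine could not exceed EUR 60 million, and ten days of periodic penalty payments could not exceed EUR 1 million in total.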

What Should Companies Be Doing Now?

Online service providers should be making the assessment as to whether they are subject to the DSA, based on the nature of their services and taking into account both the categories of intermediary covered by the regulation and the markets in which their services are available. Once a service provider has determined that the DSA is applicable to their business, it will be necessary to determine which of the DSA obligations are applicable.
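As a rough aid to that second step, the cumulative structure of section 4 of this update can be expressed as a lookup. This is an illustrative sketch only: the tier names and section labels mirror the headings used in this update rather than the regulation's text, the tiers are treated as strictly nested, and the only exemption modeled is the micro and small enterprise carve-out for online platform obligations. It is not a substitute for a legal assessment.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical labels for the nested categories described in this update."""
    INTERMEDIARY = 1
    HOSTING = 2
    ONLINE_PLATFORM = 3
    VERY_LARGE = 4  # VLOPs and VLOSE


# Section labels follow the headings of section 4 of this update.
SECTIONS = {
    Tier.INTERMEDIARY: "A. All intermediaries",
    Tier.HOSTING: "B. Hosting services",
    Tier.ONLINE_PLATFORM: "C. Online platforms",
    Tier.VERY_LARGE: "D. VLOPs and VLOSE",
}


def applicable_sections(tier: Tier, micro_or_small: bool = False) -> list[str]:
    """List the obligation sections that apply cumulatively to a given tier."""
    sections = [label for t, label in SECTIONS.items() if t <= tier]
    # Section C does not apply to micro or small enterprises,
    # unless they qualify as very large online platforms.
    if micro_or_small and tier == Tier.ONLINE_PLATFORM:
        sections.remove(SECTIONS[Tier.ONLINE_PLATFORM])
    return sections


# Hypothetical example: a small marketplace that is an online platform.
small_marketplace = applicable_sections(Tier.ONLINE_PLATFORM, micro_or_small=True)
```

In this example the small marketplace would still need to review sections A and B, while a designated very large online platform would need to review all four.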

If you have any questions, please do not hesitate to contact our team.